Revolutionizing Epilepsy Care: AI-Driven Seizure Detection and Classification for Interpretable Real-Time Patient Support

Alex Kogan# - Department of Information Systems

Prof. Anna Zamansky - Department of Information Systems

Prof. Ilan Shimshoni - Department of Information Systems

Dr. Ilan Goldberg - Beilinson Hospital

Dr. Felix Benninger - Beilinson Hospital

Deep Learning

Health

Privacy

SEED Grant 2024

Did you know that one-third of epilepsy patients experience seizures that current diagnostic tools struggle to detect and classify accurately?

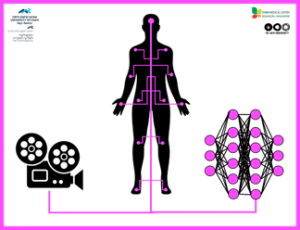

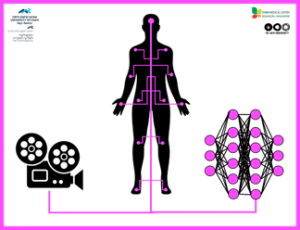

In our research, we are developing an AI-powered system designed to improve epilepsy diagnosis and management through interpretable computer vision. Epilepsy affects millions of people worldwide, and traditional diagnostic methods, such as the electroencephalogram (EEG), can be limited, especially in under-resourced settings. While many machine learning models have been proposed for analyzing epilepsy-related data, video-based seizure analysis remains a major challenge. To date, there are no widely used, reliable tools for automatic seizure detection and classification from video. Our approach focuses on analyzing patient behavior directly from clinical video recordings, using deep learning techniques to detect and classify seizures in real time. A key feature of our system is its interpretability: we aim not only to automate diagnosis but also to provide clear visual and data-driven explanations for the system’s decisions. This transparency is crucial for building trust in AI-assisted medical tools and empowering clinicians to make informed decisions. By reducing the burden of manual video review and making seizure diagnostics more accessible and explainable, we aim to support both medical professionals and patients with a non-invasive, real-time solution that enhances healthcare outcomes.

Our research involves a collaboration with the Neurology Department at Beilinson Hospital, where we analyze annotated seizure video data. Using advanced deep learning techniques, we extract patient-specific features such as body, face, hand, and leg bounding boxes, as well as pose and hand landmarks. These data points are processed with dimensionality reduction methods such as t-SNE and SVD, then used to train a CNN-RNN ensemble model that identifies and classifies seizures in real time. This framework is unique in its ability to differentiate between all epileptic seizure types and psychogenic non-epileptic seizures (PNES), providing interpretable, accurate results.
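To make the dimensionality-reduction step more concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not our actual pipeline: the data is synthetic, the figure of 66 values per frame is a placeholder for flattened landmark coordinates, and the names `reduce_with_svd` and `sliding_windows` are hypothetical helpers. It shows how per-frame feature vectors could be compressed with a truncated SVD and then cut into fixed-length clips of the kind a sequence model could consume.

```python
import numpy as np


def reduce_with_svd(features: np.ndarray, n_components: int) -> np.ndarray:
    """Project per-frame feature vectors onto their top singular directions."""
    # Center each feature so the SVD captures variation around the mean.
    centered = features - features.mean(axis=0, keepdims=True)
    # Thin SVD: centered = U @ diag(S) @ Vt
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    # Keep the first n_components right singular vectors as the new basis.
    return centered @ vt[:n_components].T


def sliding_windows(sequence: np.ndarray, window: int, stride: int) -> np.ndarray:
    """Cut a (frames, dims) array into overlapping (window, dims) clips."""
    starts = range(0, sequence.shape[0] - window + 1, stride)
    return np.stack([sequence[s:s + window] for s in starts])


# Synthetic stand-in for real recordings (assumption): 300 frames,
# 66 values per frame, e.g. flattened 2D landmark coordinates.
rng = np.random.default_rng(0)
frames = rng.normal(size=(300, 66))

reduced = reduce_with_svd(frames, n_components=8)
clips = sliding_windows(reduced, window=30, stride=15)
print(reduced.shape, clips.shape)
```

With these placeholder settings, the 300 frames become 19 overlapping clips of 30 frames each, with 8 reduced features per frame. The window length, stride, and number of components are arbitrary values chosen for the example.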

We work with a dedicated team that includes Dr. Ilan Goldberg and Dr. Felix Benninger, the medical experts from Beilinson Hospital, and our advisors, Prof. Anna Zamansky and Prof. Ilan Shimshoni. This collaboration has been essential for accurately annotating seizure data and ensuring the clinical relevance of our AI models.

This research aims to provide an innovative, non-invasive tool that supports clinicians in diagnosing and managing epilepsy more efficiently. By automating seizure detection, classification, and interpretation, this system could improve patient care and reduce clinician workload. Although the project is still in progress, our current models have already achieved impressive accuracy rates. We are preparing our findings for publication and are excited to continue refining the system for clinical use.
